Building a Furniture Classifier

Source code kept private to respect Drexel’s academic integrity policies.

A Quick Demo:

Dataset:

The dataset consists of images from Ikea’s furniture catalogue. Each image shows a single bed, chair, sofa, or table on a white background. Starting from a provided sample classifier and autoencoder, I was tasked with modifying them into a set of stacked autoencoders and a final classifier. Because some images in the dataset could reasonably be mistaken for another class, I aimed for a final classifier accuracy of around 95%.
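Accuracy here means the fraction of images whose highest-scoring class matches the true label. The sketch below assumes the classifier emits one logit per class and that labels are integer class indices. The CLASSES ordering and the accuracy helper are hypothetical, for illustration only.

```python
import torch

# Hypothetical label order; the post does not say how classes are indexed.
CLASSES = ["bed", "chair", "sofa", "table"]

def accuracy(logits: torch.Tensor, labels: torch.Tensor) -> float:
    """Fraction of samples whose highest-scoring class matches the label."""
    predictions = logits.argmax(dim=1)  # (batch, 4) -> (batch,)
    return (predictions == labels).float().mean().item()

# Four samples, one misclassified: 3 of 4 correct.
logits = torch.tensor([
    [2.0, 0.1, 0.1, 0.1],  # predicted bed, label bed
    [0.1, 2.0, 0.1, 0.1],  # predicted chair, label chair
    [0.1, 2.0, 0.1, 0.1],  # predicted chair, but the label is sofa
    [0.1, 0.1, 0.1, 2.0],  # predicted table, label table
])
labels = torch.tensor([0, 1, 2, 3])
print(accuracy(logits, labels))  # 0.75
```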

Autoencoders:

Each autoencoder learns to reproduce its input as its output. It consists of an encoder, which transforms the input into a latent representation, and a decoder, which transforms the latent representation back into an output with the same shape as the input. Three autoencoders with the same layers are stacked, and their encoders form the front of the network used by the final classifier.
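As a minimal sketch of the encode/decode round trip, assuming 64×64 RGB inputs and a hypothetical n_kernels of 16, a single autoencoder with the layers described in the next sections could look like this. TinyAutoencoder is an illustrative name, and plain nn.Module stands in for the course-provided Model wrapper.

```python
import torch
import torch.nn as nn

class TinyAutoencoder(nn.Module):
    """Hypothetical single-stage autoencoder mirroring the encoder/decoder layers below."""

    def __init__(self, in_channels: int = 3, n_kernels: int = 16):
        super().__init__()
        # Encoder: convolution + ReLU + 2x max pooling -> latent representation
        self.encoder = nn.Sequential(
            nn.Conv2d(in_channels, n_kernels, kernel_size=3, padding=1),
            nn.ReLU(),
            nn.MaxPool2d(kernel_size=2),
        )
        # Decoder: stride-2 transposed convolution maps the latent back to the input shape
        self.decoder = nn.Sequential(
            nn.ConvTranspose2d(n_kernels, in_channels, kernel_size=3, stride=2,
                               padding=1, output_padding=1),
            nn.Sigmoid(),  # assumes pixel values scaled to [0, 1]
        )

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        latent = self.encoder(x)
        return self.decoder(latent)

model = TinyAutoencoder()
images = torch.rand(8, 3, 64, 64)  # random stand-in for a batch of 64x64 RGB images
reconstruction = model(images)
print(model.encoder(images).shape)  # torch.Size([8, 16, 32, 32])
print(reconstruction.shape)         # torch.Size([8, 3, 64, 64])
```

The latent map has more channels but a quarter of the pixels, and the reconstruction comes back at exactly the input shape, which is what lets a reconstruction loss compare the two directly.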

Encoder

Each encoder performs a single convolution followed by a ReLU and max pooling, layered together with PyTorch’s nn module. Because max pooling halves the height and width, the input to the second encoder is half the size of the first encoder’s input, and the input to the third encoder is half the size of the second’s.
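The halving can be checked with the standard output-size arithmetic for convolution and pooling. This sketch assumes a 64×64 input, which is inferred from the classifier’s nn.Linear(n_kernels * 8 * 8, ...) after three encoders rather than stated in the post; the helper names are hypothetical.

```python
def conv2d_out(size: int, kernel_size: int = 3, padding: int = 1, stride: int = 1) -> int:
    """Output height/width of nn.Conv2d (dilation 1)."""
    return (size + 2 * padding - kernel_size) // stride + 1

def maxpool2d_out(size: int, kernel_size: int = 2) -> int:
    """Output height/width of nn.MaxPool2d, whose stride defaults to kernel_size."""
    return (size - kernel_size) // kernel_size + 1

def encoder_sizes(input_size: int, n_stages: int = 3) -> list[int]:
    """Spatial size entering each encoder, plus the size after the last one."""
    sizes = [input_size]
    for _ in range(n_stages):
        # a 3x3 convolution with padding=1 keeps the size; pooling then halves it
        sizes.append(maxpool2d_out(conv2d_out(sizes[-1])))
    return sizes

print(encoder_sizes(64))  # [64, 32, 16, 8]
```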

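The second and third autoencoders consist of the same layers, but they are trained on data that has already been encoded by the prior encoder(s), so their first convolution takes n_kernels input channels instead of 3.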

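```python
# Encoder for the first autoencoder. Model comes from the provided sample code (not PyTorch).
# Encoders 2 and 3 use nn.Conv2d(n_kernels, n_kernels, ...) because their input is the
# n_kernels-channel output of the previous encoder.
self.encoder = Model(
    input_shape=(self.BATCH_SIZE, 3, dimension, dimension),
    layers=[
        nn.Conv2d(3, n_kernels, kernel_size=3, padding=1),  # keeps height and width
        nn.ReLU(),
        nn.MaxPool2d(kernel_size=2),  # halves height and width
    ]
)
```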
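One way to produce the training data for the second and third autoencoders is to run the images through the already-trained encoders without tracking gradients. This is a sketch under assumptions: the post only says the data "is encoded by the prior encoder(s)", so freezing the earlier encoders during this step, the make_encoder and encode_with helpers, and n_kernels = 16 are all hypothetical. nn.Sequential stands in for the course’s Model wrapper.

```python
import torch
import torch.nn as nn

def make_encoder(in_channels: int, n_kernels: int) -> nn.Sequential:
    """Conv + ReLU + max pool, matching the encoder layers above."""
    return nn.Sequential(
        nn.Conv2d(in_channels, n_kernels, kernel_size=3, padding=1),
        nn.ReLU(),
        nn.MaxPool2d(kernel_size=2),
    )

@torch.no_grad()
def encode_with(encoders: list[nn.Module], images: torch.Tensor) -> torch.Tensor:
    """Pass images through already-trained encoders to get the next stage's training input."""
    x = images
    for encoder in encoders:
        encoder.eval()
        x = encoder(x)
    return x

n_kernels = 16  # hypothetical value
encoder1 = make_encoder(3, n_kernels)          # would come from the first autoencoder
encoder2 = make_encoder(n_kernels, n_kernels)  # would come from the second autoencoder

images = torch.rand(4, 3, 64, 64)  # random stand-in batch
ae2_input = encode_with([encoder1], images)            # training data for autoencoder 2
ae3_input = encode_with([encoder1, encoder2], images)  # training data for autoencoder 3
print(ae2_input.shape, ae3_input.shape)
# torch.Size([4, 16, 32, 32]) torch.Size([4, 16, 16, 16])
```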

Decoder

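The decoder needs to undo the encoder: a transposed convolution with stride 2 maps the n_kernels latent channels back to the input’s channel count and doubles the height and width that max pooling halved.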

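```python
# Decoder for the first autoencoder; decoders 2 and 3 output n_kernels channels instead of 3.
self.decoder = Model(
    input_shape=(self.BATCH_SIZE, n_kernels, dimension // 2, dimension // 2),
    layers=[
        # stride=2 with output_padding=1 doubles height and width
        nn.ConvTranspose2d(n_kernels, 3, kernel_size=3, stride=2,
                           padding=1, output_padding=1),
        nn.Sigmoid(),  # nn.ReLU() for the second and third decoders
    ]
)
```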
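Why output_padding=1: the output size of nn.ConvTranspose2d is given in the PyTorch documentation as (H_in − 1)·stride − 2·padding + dilation·(kernel_size − 1) + output_padding + 1. The sketch below evaluates that formula with the decoder’s settings and cross-checks it against PyTorch; the helper name and the 16-channel latent are illustrative assumptions.

```python
import torch
import torch.nn as nn

def conv_transpose2d_out(size, kernel_size=3, stride=2, padding=1, output_padding=1, dilation=1):
    """Output height/width of nn.ConvTranspose2d, per the formula in the PyTorch docs."""
    return ((size - 1) * stride - 2 * padding
            + dilation * (kernel_size - 1) + output_padding + 1)

# With the decoder's settings the formula reduces to 2 * s: (s - 1)*2 - 2 + 2 + 1 + 1 = 2s.
for latent_size in (8, 16, 32):
    assert conv_transpose2d_out(latent_size) == 2 * latent_size

# Cross-check against PyTorch on a 32x32 latent map with 16 channels (hypothetical n_kernels).
deconv = nn.ConvTranspose2d(16, 3, kernel_size=3, stride=2, padding=1, output_padding=1)
out = deconv(torch.rand(1, 16, 32, 32))
print(out.shape)  # torch.Size([1, 3, 64, 64])
```

Without output_padding the result would be 2s − 1 (63 instead of 64), and the reconstruction would no longer match the input shape.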

Training

Each autoencoder is trained for 100 epochs:

```
python3 ae1.py 100
python3 ae2.py 100
python3 ae3.py 100
```
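A reconstruction training loop for one autoencoder could look like the sketch below. The loss (mean squared error), optimizer (Adam), and learning rate are assumptions, since the post does not state them; train_autoencoder is a hypothetical helper, and the tiny model and random batches exist only so the example runs.

```python
import torch
import torch.nn as nn

def train_autoencoder(model: nn.Module, batches: list[torch.Tensor], epochs: int,
                      lr: float = 1e-3) -> list[float]:
    """Minimize reconstruction error; returns the mean loss of each epoch.

    MSE loss and Adam with lr=1e-3 are assumptions, not details from the post.
    """
    criterion = nn.MSELoss()
    optimizer = torch.optim.Adam(model.parameters(), lr=lr)
    history = []
    for _ in range(epochs):
        model.train()
        total = 0.0
        for x in batches:
            optimizer.zero_grad()
            loss = criterion(model(x), x)  # the target is the input itself
            loss.backward()
            optimizer.step()
            total += loss.item()
        history.append(total / len(batches))
    return history

torch.manual_seed(0)
model = nn.Sequential(  # stand-in for the first autoencoder (encoder + decoder)
    nn.Conv2d(3, 16, kernel_size=3, padding=1), nn.ReLU(), nn.MaxPool2d(2),
    nn.ConvTranspose2d(16, 3, kernel_size=3, stride=2, padding=1, output_padding=1),
    nn.Sigmoid(),
)
batches = [torch.rand(8, 3, 64, 64) for _ in range(2)]  # random stand-in batches
losses = train_autoencoder(model, batches, epochs=3)
print(len(losses))  # 3
```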

Classifier:

The final classifier has the following layers, with each of the three encoders serving as a layer:

```python
layers=[
    self.encoder1,
    self.encoder2,
    self.encoder3,
    nn.Flatten(),
    nn.Dropout(p=0.1),
    nn.Linear(n_kernels * 8 * 8, 256),
    nn.ReLU(),
    nn.Dropout(p=0.1),
    nn.Linear(256, 64),
    nn.ReLU(),
    nn.Linear(64, 4),
]
```
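To see how the pieces fit, here is a sketch of the same layer stack assembled with nn.Sequential in place of the course’s Model wrapper. It assumes 64×64 inputs and a hypothetical n_kernels of 16, and uses freshly initialized encoders purely for a shape check; in the real pipeline they would be the pretrained ones.

```python
import torch
import torch.nn as nn

n_kernels = 16  # hypothetical; the post does not state the value

def make_encoder(in_channels: int) -> nn.Sequential:
    """Conv + ReLU + max pool, as in each autoencoder's encoder."""
    return nn.Sequential(
        nn.Conv2d(in_channels, n_kernels, kernel_size=3, padding=1),
        nn.ReLU(),
        nn.MaxPool2d(kernel_size=2),
    )

encoder1, encoder2, encoder3 = make_encoder(3), make_encoder(n_kernels), make_encoder(n_kernels)

classifier = nn.Sequential(
    encoder1, encoder2, encoder3,  # 64x64 -> 32x32 -> 16x16 -> 8x8
    nn.Flatten(),                  # (batch, n_kernels, 8, 8) -> (batch, n_kernels * 64)
    nn.Dropout(p=0.1),
    nn.Linear(n_kernels * 8 * 8, 256),
    nn.ReLU(),
    nn.Dropout(p=0.1),
    nn.Linear(256, 64),
    nn.ReLU(),
    nn.Linear(64, 4),              # one logit per furniture class
)

classifier.eval()  # disable dropout for a deterministic forward pass
logits = classifier(torch.rand(2, 3, 64, 64))
print(logits.shape)  # torch.Size([2, 4])
```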

The classifier is trained for 100 epochs:

```
python3 cl1.py 100
```
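A classifier training loop differs from the autoencoder one mainly in its loss: cross-entropy between the four logits and the integer class label. The post does not say whether the pretrained encoders are frozen or fine-tuned during this step, so the hypothetical train_classifier helper below exposes that as a flag; Adam and the learning rate are likewise assumptions, and the tiny model and random data are stand-ins so the example runs.

```python
import torch
import torch.nn as nn

def train_classifier(model: nn.Module, encoders: list[nn.Module], batches, epochs: int,
                     freeze_encoders: bool = False, lr: float = 1e-3) -> list[float]:
    """Train with cross-entropy on (images, labels) batches; returns per-epoch mean loss."""
    # Freezing or fine-tuning the pretrained encoders is an open choice, not from the post.
    for encoder in encoders:
        for p in encoder.parameters():
            p.requires_grad = not freeze_encoders
    params = [p for p in model.parameters() if p.requires_grad]
    optimizer = torch.optim.Adam(params, lr=lr)
    criterion = nn.CrossEntropyLoss()  # expects raw logits and integer class labels
    history = []
    for _ in range(epochs):
        model.train()  # enables any dropout layers
        total = 0.0
        for images, labels in batches:
            optimizer.zero_grad()
            loss = criterion(model(images), labels)
            loss.backward()
            optimizer.step()
            total += loss.item()
        history.append(total / len(batches))
    return history

# Tiny stand-in: one encoder plus a linear head on 16x16 inputs (hypothetical sizes).
torch.manual_seed(0)
encoder = nn.Sequential(nn.Conv2d(3, 4, kernel_size=3, padding=1), nn.ReLU(), nn.MaxPool2d(2))
model = nn.Sequential(encoder, nn.Flatten(), nn.Linear(4 * 8 * 8, 4))
batches = [(torch.rand(8, 3, 16, 16), torch.randint(0, 4, (8,))) for _ in range(2)]
frozen_before = [p.clone() for p in encoder.parameters()]
losses = train_classifier(model, [encoder], batches, epochs=2, freeze_encoders=True)
print(len(losses))  # 2
```

With freeze_encoders=True only the head is updated, which keeps the autoencoder features intact; leaving it False lets the whole network adapt to the labels.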

The Final Results:

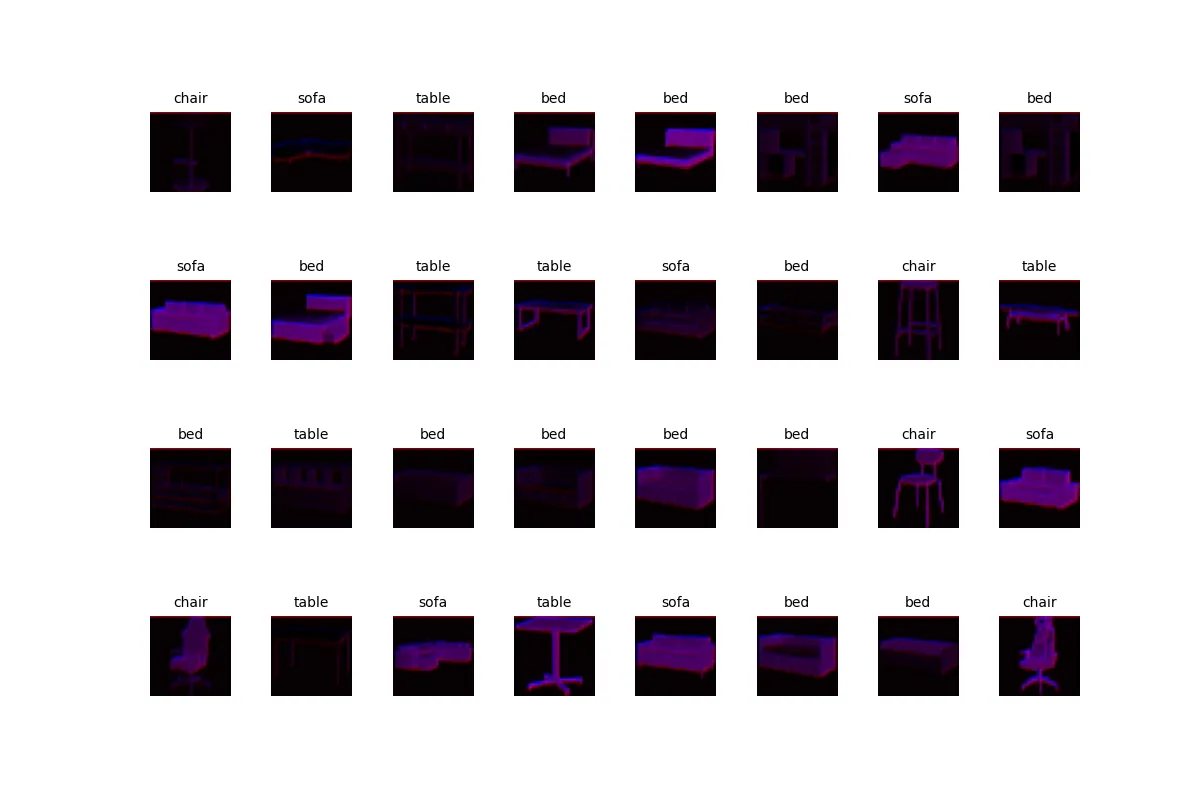

Autoencoder #1

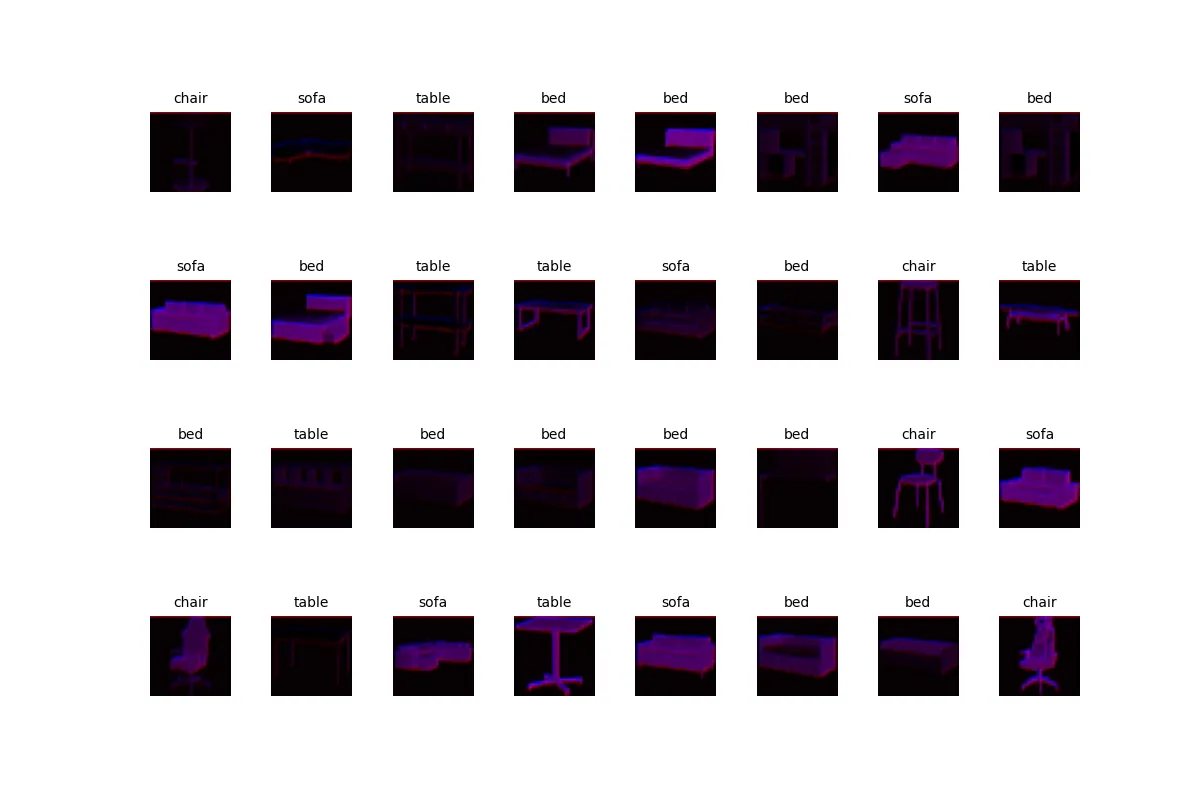

Autoencoder #2

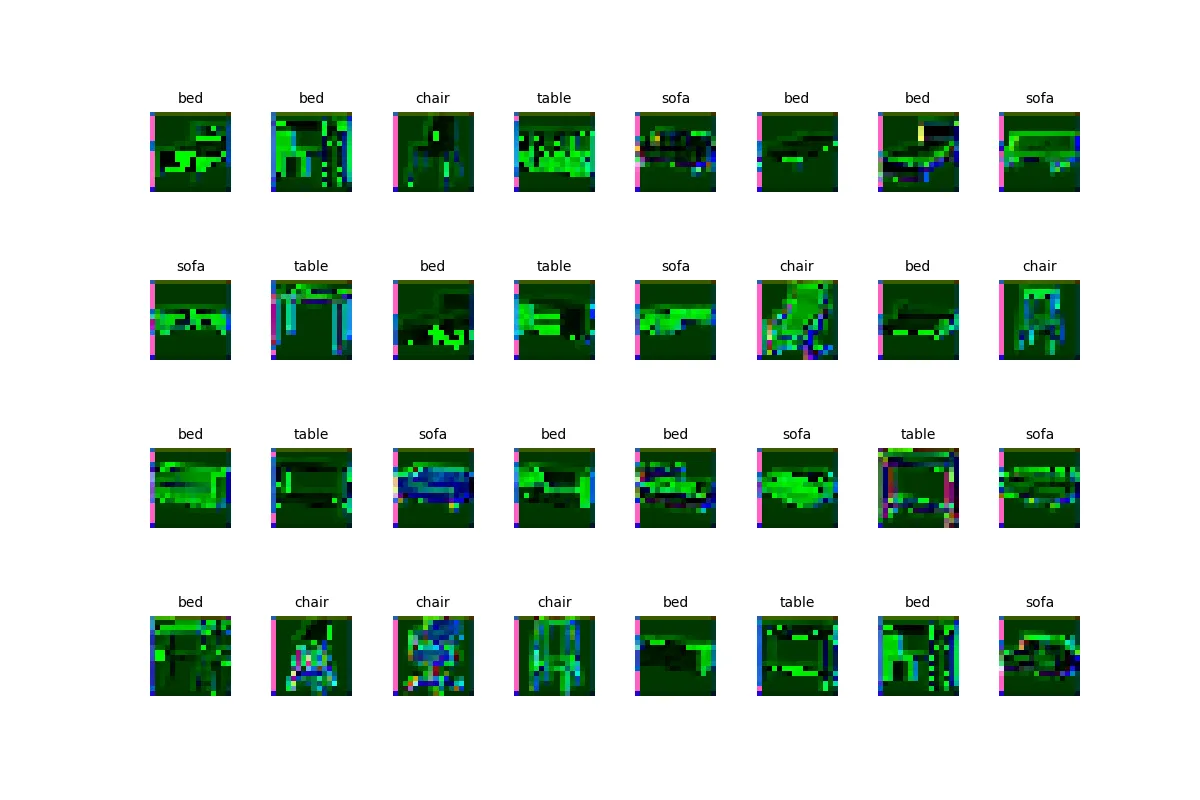

Autoencoder #3

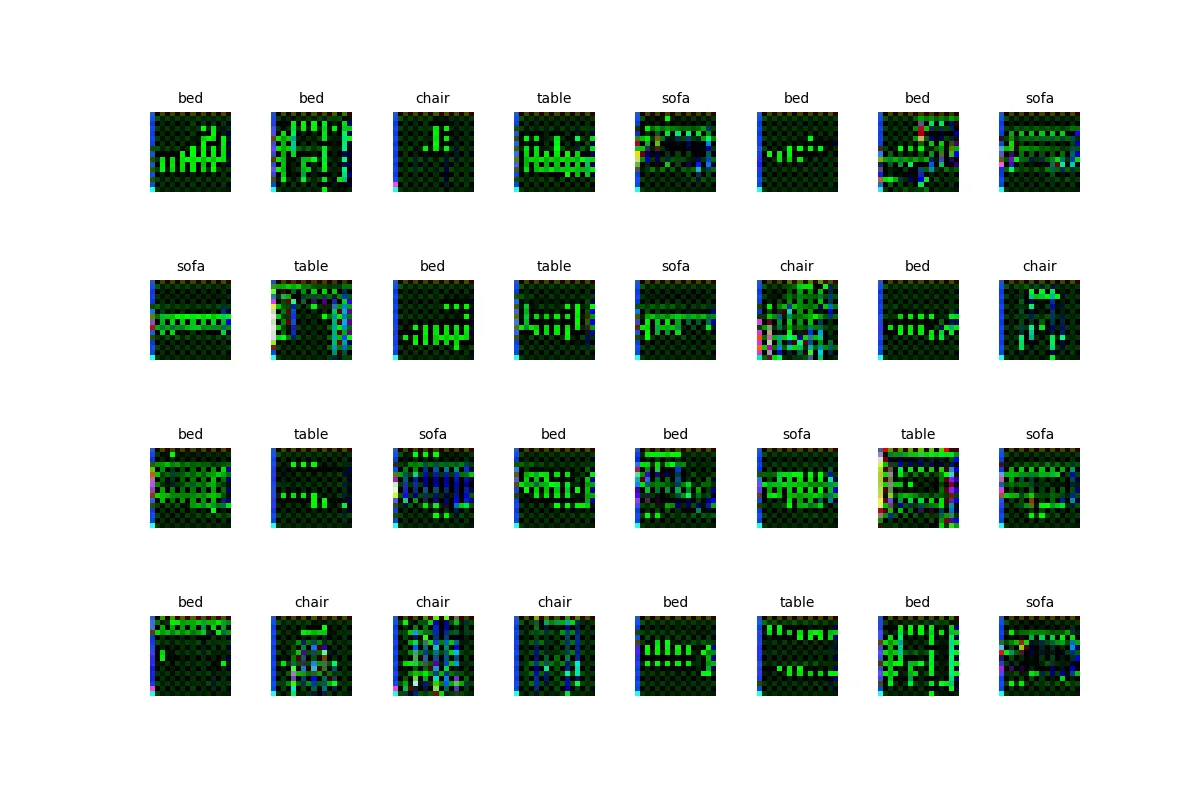

Classifier